By Nicole A. Lamparello, MD and Molly Somberg, MD, MPA

Peer Reviewed

Please enjoy this post from the archives dated February 27, 2014

You hear it wherever you eat, whether at the deli ordering a …

Please enjoy this post from the archives dated February 5, 2014

By Matthew A. Haber

Peer Reviewed

The following is a hypothetical example of a classic exam question that one might come across as a medical student:

A 50-year-old male presents to the emergency department with …

Please enjoy this post from the archives, dated January 29, 2014

By Gregory Katz, MD

Peer Reviewed

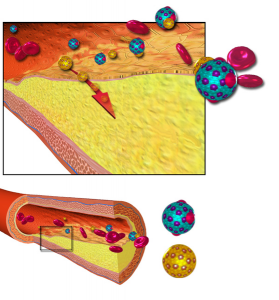

As levels of HDL cholesterol increase, rates of heart disease go down. It’s this fact that has given HDL …

Please enjoy this post from the archives, dated January 15, 2014

By Robert Mocharla, MD

Peer Reviewed

No. Sorry. Despite such reasonable excuses as – “I forgot my iPod”, “It’s pouring rain”, or “Game of Thrones …

Please enjoy this post from the archives dated January 10, 2014

By Shivani K Patel, MD

Peer Reviewed

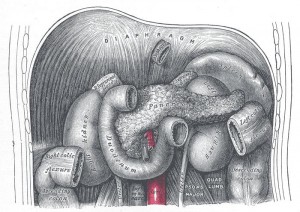

A 61-year-old male with chronic epigastric discomfort presented to the emergency room with severe abdominal pain radiating …

Please enjoy this post from the archives, dated November 20, 2013

By Fernando Franco Cuadrado, MD, Julia Hyland Bruno, MD and Mark D. Schwartz, MD

Faculty Peer Reviewed

When flu season returns, we will all see …

Please enjoy this post from the archives dated November 8, 2013

By Gregory Katz, MD

Faculty Peer Reviewed

Every day in clinic, we tell our patients to choose foods low in saturated fat. Because these foods raise …

Please enjoy this post from the archives dated October 30, 2013

By Robert Joseph Fakheri, MD

Faculty Peer Reviewed

A 55-year-old male is recently diagnosed with systemic sarcoidosis. The patient is started on prednisone 40 mg …