By Jessica K Qiu

Peer Reviewed

In 1998, there were 34 million adults aged 65 years or older in the US.1 By 2030, that number is expected to double.1 This dramatic increase …

By Matthew Vorsanger MD, David Kudlowitz MD, and Patrick Cocks MD

Peer Reviewed

Learning objectives:

1. Describe the pathophysiology and clinical features of G6PD deficiency.

2. Discuss susceptibility to favism.

3. Give a brief historical perspective …

By John Hwang MD, Cindy Fang MD, Neil Shapiro MD, Marty Fried MD || Illustration by Michael Shen MD || Audio …

By Hannah Friedman

Peer Reviewed

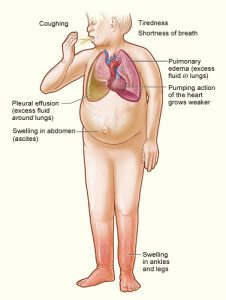

It is a commonly seen scenario on the wards: a patient with a past medical history of heart failure and stage 4 chronic kidney disease presents with progressive …

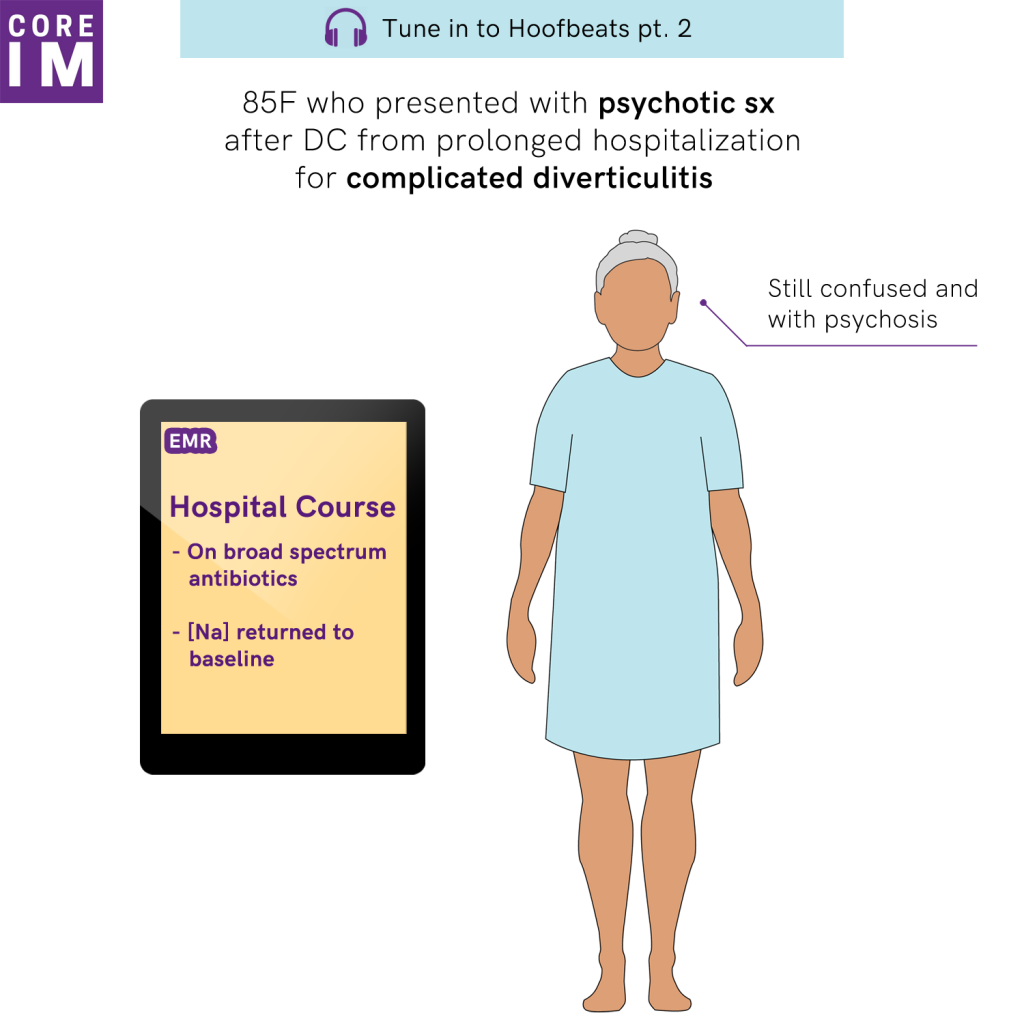

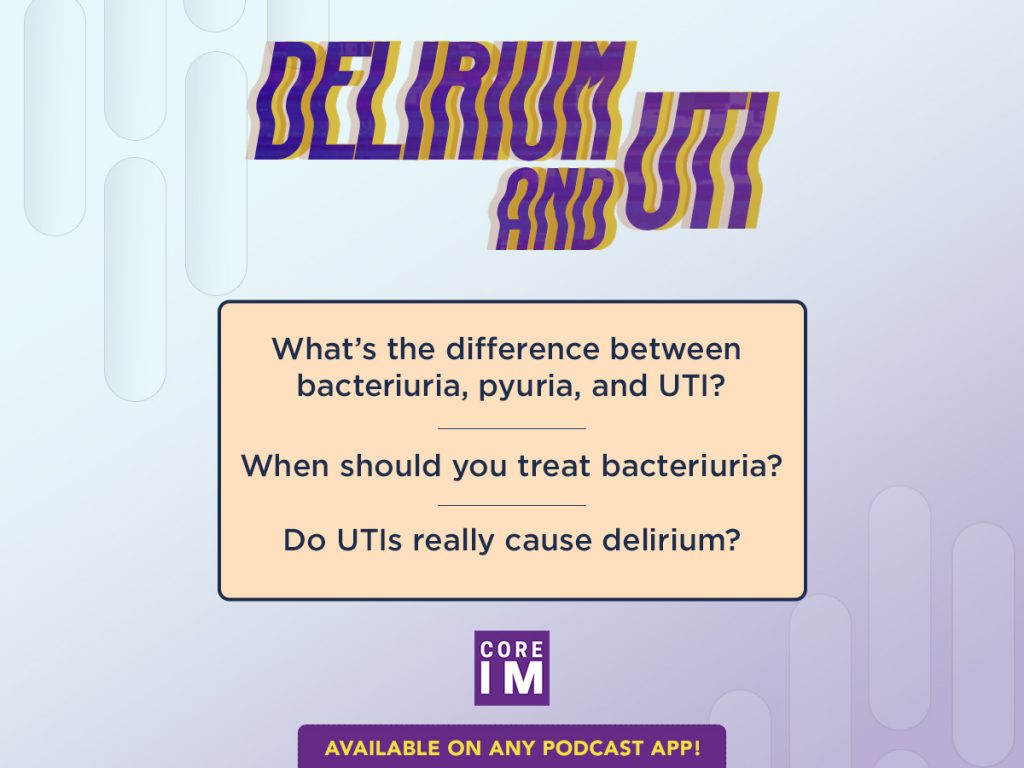

Join us in this episode as we question everything you ever thought you knew about… urinary tract infections (UTI) and delirium. || By Steven R. Liu MD, …

By Dixon Yang, MD

Peer Reviewed

Abstract

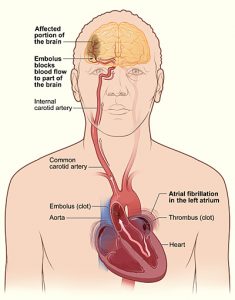

Atrial fibrillation (AF) is a common arrhythmia, especially in the elderly, and is often asymptomatic. However, the absence of symptoms does not confer a better prognosis. Many patients with AF …

By Monil Shah, MD and Arun Manmadhan, MD

Peer Reviewed

A 64-year-old male with a history of hypertension, dyslipidemia, and uncontrolled diabetes is brought to the emergency room with new …

By Christopher Sonne, MD

Peer Reviewed

Learning Objectives:

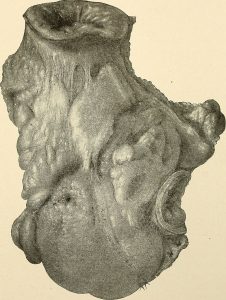

1. Understand the pathophysiology of peritonitis secondary to bowel perforation.

2. Understand how classic findings of peritonitis can be absent in some …