By Brit Trogen

Peer Reviewed

In 2001, the Institute of Medicine’s *Crossing the Quality Chasm* became the seminal report recognizing patient-centered care as a crucial component of overall health care quality.[1] Since then, patient …

By Dixon Yang, MD

Peer Reviewed

Abstract

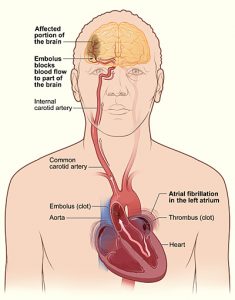

Atrial fibrillation (AF) is a common arrhythmia, especially in the elderly, and is often asymptomatic. However, the absence of symptoms does not confer a better prognosis. Many patients with AF present with stroke …

By Monil Shah, MD and Arun Manmadhan, MD

Peer Reviewed

A 64-year-old male with a history of hypertension, dyslipidemia, and uncontrolled diabetes is brought to the emergency room with new-onset substernal chest …

By Daniela Rebollo Salazar

Peer Reviewed

In the past ten years, the number of bacterial pathogens resistant to multiple antibiotics has dramatically increased. The emergence of resistant microorganisms is a direct product of the …

By Thatcher Heumann, MD

Peer Reviewed

“Rapid Response Team to 7W. Rapid Response Team to 7W.” After switching elevators and waiting for security to buzz you in through the double doors, you …

By Scott Statman, MD

Peer Reviewed

There is little doubt that an association between asthma and gastroesophageal reflux disease (GERD) exists. However, clinicians have debated the nature of this relationship for decades. Asthma and GERD are among …

By Vishal Shah, MD

Peer Reviewed

Nonsteroidal anti-inflammatory drugs (NSAIDs) are a heterogeneous group of non-opioid analgesics and anti-inflammatory agents. Their use is ubiquitous, from treating a simple tension headache to a sprained ankle. NSAIDs are …

By Calvin Ngai, MD

Peer Reviewed

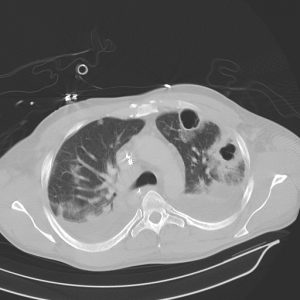

A 71-year-old Caucasian woman with hypertension presented with a 2-day history of productive cough and fever. She was living alone and had no history of any recent hospitalizations. On …