By Raymond Barry

Peer Reviewed

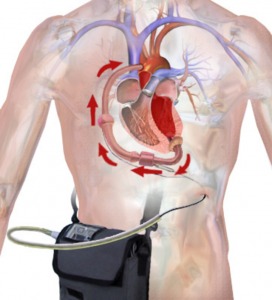

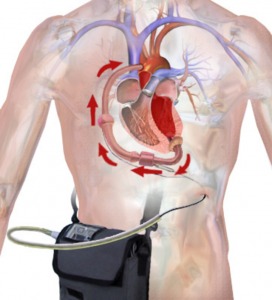

A 2020 report published by the American Heart Association (AHA) in conjunction with the National Institutes of Health (NIH) found that an estimated 6.2 million American adults had heart …

By: Michael Moore

Peer Reviewed

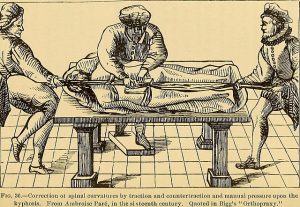

“Too many complex back surgeries are being performed and patients are suffering as a result” wrote National Public Radio health science journalist Joanne Silberner in her 2010 article “Surgery May …

By Kathryn Hockemeyer

Peer Reviewed

I caught up with a friend who works in environmental, social, and corporate governance investing during a lull in the COVID-19 pandemic. Seconds into the conversation, he asked, …

By Akshay N. Pulavarty MPH

Peer Reviewed

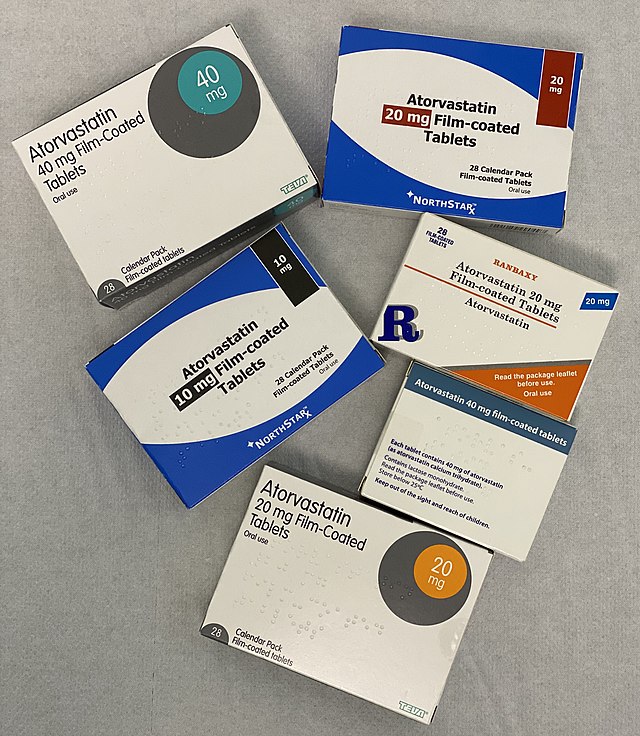

A quick look into the medicine cabinet of anyone sixty or older will likely reveal a statin. Primary prevention with high-intensity statins has substantially reduced the …

By Mahip Grewal

Peer Reviewed

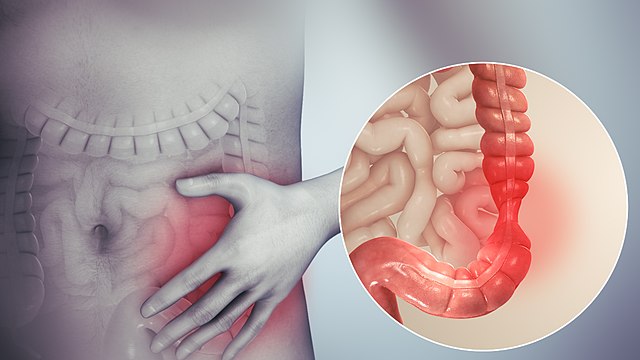

Nonalcoholic fatty liver disease (NAFLD) is estimated to affect 25% of the world’s population.1 NAFLD is a spectrum of disease ranging from nonalcoholic fatty liver (NAFL), which is …

By Matthew Haller

Peer Reviewed

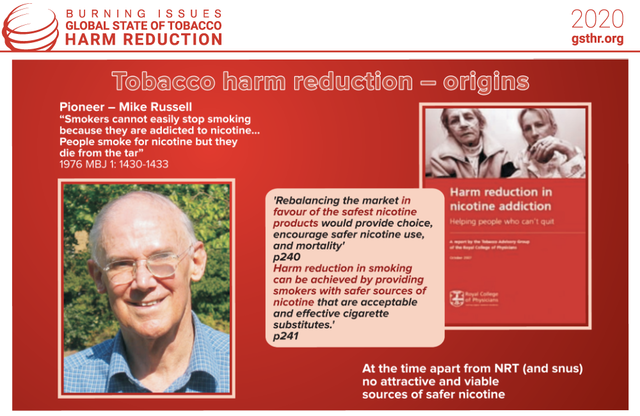

The default mode of medical practice is curative: pursuit of the complete eradication of the malady at hand, leading to symptomatic relief and prolongation of life. In actuality, …

By Devon Zander

Peer Reviewed

Much has been said about the art of taking a patient history. The aphorism most commonly invoked by medical educators is that “80% of diagnoses can be made …

By Quinn Silverglate

Peer Reviewed

At any of the over 26,000 restaurants that populate the city of New York, I have to ask the same questions: “Does this have dairy in it? …