By William Plowe

Peer Reviewed

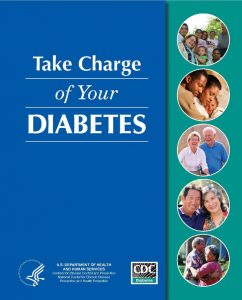

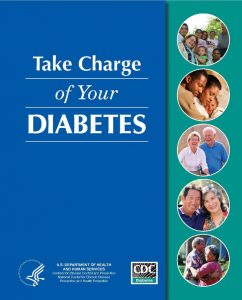

Metformin has been the first-line drug in type 2 diabetes for over a decade, but its possible benefit in type 1 diabetes (DM1) is still a matter of study. …

By Kevin Rezzadeh

Peer Reviewed

Injuries associated with amateur boxing include facial lacerations, hand injuries, and bruised ribs.[1] While many of the superficial wounds and bone fractures can completely heal, brain …

By Jessica K Qiu

Peer Reviewed

In 1998, there were 34 million adults aged 65 years or older in the US.[1] By 2030, that number is expected to double.[1] This dramatic …

By Sophia Chen

Peer Reviewed

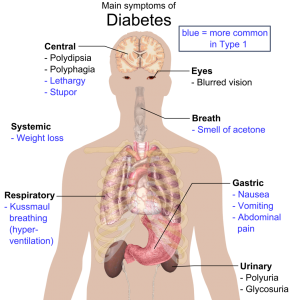

Once considered a disease of the West, type 2 diabetes mellitus is now a global epidemic whose incidence correlates with economic growth. This is evident in Asian countries …

By David Pineles, MD

Peer Reviewed

You are a third-year internal medicine resident finishing your night shift at John Doe Hospital. Your shift so far was challenging to say …

By Kurtis Carlock, MD

Peer Reviewed

Obesity is a problem that affects over one-third of adults in the US and increases the risk of numerous health problems in these individuals.[1] The question of how best to combat obesity is a problem that has …

By Hannah Friedman

Peer Reviewed

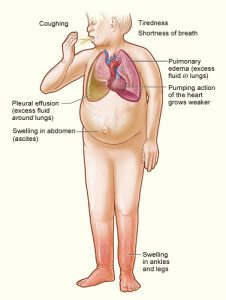

It is a commonly seen scenario on the wards: a patient with a past medical history of heart failure and stage 4 chronic kidney disease presents with progressive …

By Nicolas Gillingham

Peer Reviewed

Over 30 million Americans—9.4% of the population—live with diabetes, six million of whom are at least partially dependent on exogenous insulin.[1] Insulin can be …