By Hannah Kopinsky, MD

Peer Reviewed

Appendicitis is the most common reason for urgent surgery related to abdominal pain in the US, with a lifetime incidence of 8.6% for men and 6.7% for women.1 The …

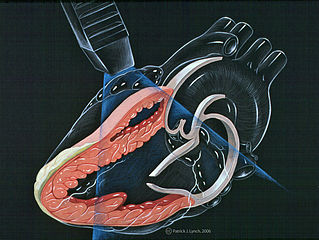

By Pamela Boodram, MD

Peer Reviewed

A 68-year-old woman with a history of hypertension and well controlled type 2 diabetes presents to the ED with five days of progressively worsening dyspnea on exertion, orthopnea, and …

By Avani Kolla

Peer Reviewed

During my trip to India, the “family bonding” reached a new level when I shared my upper respiratory infection with my parents and sister. On day two of …

By Daniel Gratch, MD

Peer Reviewed

In 150 AD, Greek physician and philosopher Galen wrote of a woman suffering from insomnia: “I was convinced the woman was afflicted not by a bodily disease, …

By Elana Kreiger-Benson

Peer Reviewed

“I’m not actually planning to try it,” the patient whispered to me while I was feeling her radial pulses. We had just finished an extensive conversation with her …

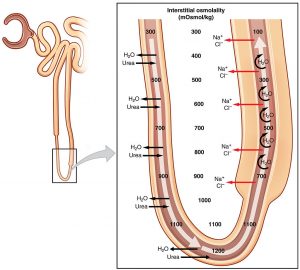

By Austin Cheng, MD

Peer Reviewed

As a resident in internal medicine, hearing the words ‘Loop of Henle’ brings back memories from early medical school of complex diagrams of anatomy, ion …

By Laura McLaughlin

Peer Reviewed

In the United States, a third of people on dialysis for kidney failure are African American, yet this population comprises only 13% of the US population.1 …

By Scarlett Murphy, MD

Peer Reviewed

We are all too aware of the dreaded indwelling Foley catheter and the complications it invites. We know that its smooth, plastic surface …