By Karen McCloskey, MD

Peer Reviewed

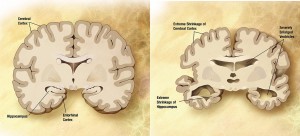

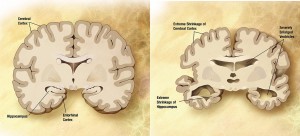

In May 2012, the U.S. Department of Health and Human Services unveiled the “National Plan to Address Alzheimer’s Disease,” in response to legislation signed by President Obama in …

By Eric Jeffrey Nisenbaum, MD

Peer Reviewed

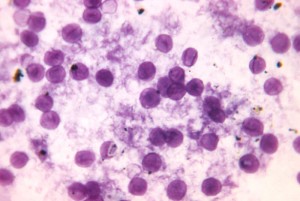

Mr. O is a 93-year-old man with a past medical history notable for severe Alzheimer’s dementia and amputation of the left upper extremity secondary to …

By Maxine Wallis Stachel, MD

Peer Reviewed

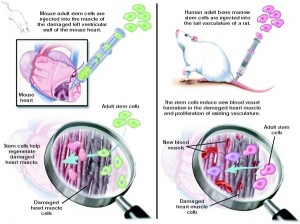

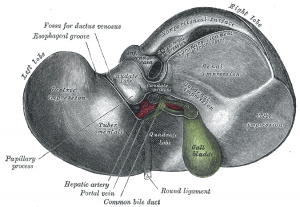

The Scale of the Problem

Despite decades of rigorous data collection, drug research, patient education and evidence-based practice, ischemic heart disease (IHD) and congestive heart failure (CHF) remain …

By Martin Fried, MD

Peer Reviewed

Learning Objectives

By Andrew Sideris

Peer Reviewed

Reduction of dietary sodium is a well-known nonpharmacologic therapy to reduce blood pressure. The Eighth Joint National Committee (JNC 8) recommends that the general population limit daily …

By Omotayo Arowojolu

Peer Reviewed

Approximately 32% of American adults have high blood pressure (>140/90 mmHg),1 or hypertension, and only 54% of these individuals have well-controlled hypertension.2,3 Hypertension costs $48.6 billion each year …

By Lauren Christene Strazzulla

Peer Reviewed

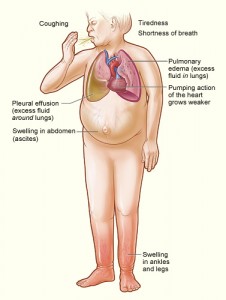

The lifetime risk for developing heart failure from age 55 on is 33% for men and 28.5% for women, and as the population ages, there is an increasing …

By Samantha Kass Newman, MD

Peer Reviewed

Today marks the first publication of the new Spotlight series in Clinical Correlations. This series uses case vignettes to explore diagnosis, pathophysiology, and management of …